Digital Shoestring Student Projects - DIAL Newsletter Winter 2019

By Alan Thorne, Senior Technical Officer

As you will have seen in recent correspondence, we have successfully kicked off the Shoestring low-cost automation project. To support the Shoestring kick-off activities, we asked our manufacturing master’s students to investigate three stretch activities within the Manufacturing Systems / Robot Lab module: camera-based tracking, augmented reality and audio-based control. During this module the students design, build and integrate a gearbox production line, making it an ideal testbed for exploring these topics.

Camera Based Tracking System

The goal of this project was to provide a low-cost, vision-based system that could identify and track the different shuttles used to transfer parts around the production system. The system comprised a 720p webcam, a Raspberry Pi and the open-source OpenCV vision software. The camera was installed looking down onto a junction point of the conveyor system, where shuttles are diverted from the main feeder loop into and out of an operational side loop as needed. The idea was that if the solution worked well at a single junction point, it could be duplicated at other junction points to provide a comprehensive tracking solution.

The OpenCV software was configured to identify colour markers, distinguished by their RGB levels, that had been placed on each of the six shuttles. It also provided X, Y coordinates for each identified colour marker as it passed through the webcam’s view. These X, Y values were used to determine whether a shuttle was staying on the main loop, diverting onto the sub loop, re-joining the main loop or circulating on the sub loop.
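The route decision can be sketched as follows. This is a minimal illustration, not the students' code: it assumes the OpenCV stage has already reduced each frame to one (x, y) centroid per marker, and that the camera view is split at a hypothetical pixel row `SPLIT_Y`, with the main loop in the upper part of the image and the side loop in the lower part. Comparing the lane a shuttle is first seen in with the lane it is last seen in gives the four cases described above.

```python
from typing import List, Tuple

# Hypothetical calibration for a 640x480 frame: centroids above this pixel
# row are on the main loop, centroids on or below it are on the side loop.
SPLIT_Y = 240

ROUTES = {
    ("main", "main"): "staying on main loop",
    ("main", "side"): "diverting onto sub loop",
    ("side", "main"): "re-joining main loop",
    ("side", "side"): "circulating on sub loop",
}

def lane(y: float) -> str:
    """Return which loop a centroid's y coordinate falls in."""
    return "main" if y < SPLIT_Y else "side"

def classify_route(track: List[Tuple[float, float]]) -> str:
    """Classify a shuttle's path through the junction from its (x, y)
    centroids, ordered by capture time, using the first and last lanes seen."""
    if len(track) < 2:
        return "unknown"  # too few samples to tell where the shuttle went
    first_lane = lane(track[0][1])
    last_lane = lane(track[-1][1])
    return ROUTES[(first_lane, last_lane)]
```

For example, `classify_route([(20, 100), (200, 180), (400, 300)])` starts in the main lane and ends in the side lane, so it reports "diverting onto sub loop". A real junction would need zones drawn to match the conveyor geometry, and the "unknown" case shows why the sample rate matters: a shuttle seen in only one frame cannot be classified.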

The implemented solution worked well, although significant effort was required to optimise the OpenCV code so that images could be sampled quickly enough to determine the direction of shuttle travel (the shuttles move at 250 mm/s). This involved reducing the image capture resolution and compartmentalising the image capture area so that basic direction-of-travel analysis could be carried out. Considerable effort was also needed to provide stable lighting, as lighting levels had a big impact on the sensitivity of the RGB colour detection. In future deployments it would be better to use shape or block identification, as this would be less sensitive to lighting variation.
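The sample-rate requirement follows from simple arithmetic on the 250 mm/s shuttle speed. The sketch below assumes a hypothetical 100 mm-wide image compartment and that a marker must be seen in about three frames to establish its direction of travel; both figures are illustrative, not measurements from the project.

```python
SHUTTLE_SPEED_MM_S = 250.0  # shuttle speed quoted in the article

def mm_per_frame(fps: float) -> float:
    """Distance a shuttle moves between two consecutive frames."""
    return SHUTTLE_SPEED_MM_S / fps

def min_fps(region_width_mm: float, samples_needed: int) -> float:
    """Frame rate at which, on average, `samples_needed` frames are captured
    while a marker crosses an image compartment `region_width_mm` wide.
    Equivalent to samples_needed / (region_width_mm / SHUTTLE_SPEED_MM_S)."""
    return samples_needed * SHUTTLE_SPEED_MM_S / region_width_mm

print(mm_per_frame(10))  # 25.0 (mm between frames at 10 fps)
print(min_fps(100, 3))   # 7.5 (fps for three samples across 100 mm)
```

Halving the compartment width doubles the required frame rate, which is why reducing resolution (to raise the frame rate) and narrowing the analysed area have to be traded off against each other.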


Augmented Reality

The goal of this project was to provide a low-cost Augmented Reality (AR) system that would run on a mobile device. When a piece of equipment is viewed through the AR app, specific status information about that equipment (e.g. Running, Stopped, Error) is overlaid onto the video stream. The system comprised an Android phone running the Vuforia and Unity software components, which were integrated to provide the AR app: Vuforia handles the identification of AR targets and Unity displays graphics on the live video stream. A Raspberry Pi captures PLC system information and hosts it on a web server that the mobile AR app can access. AR targets were printed and placed on the different pieces of equipment so that the AR app could identify them.
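The Raspberry Pi side of this arrangement can be as small as a single HTTP endpoint. The sketch below uses only Python's standard library; the `/status` path, the JSON layout, the port and the equipment names are all hypothetical, and on the real rig the `STATUS` table would be refreshed from the PLC I/O rather than hard-coded.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical status table; on the real rig this would be refreshed from
# the PLC I/O that the Raspberry Pi monitors.
STATUS = {"conveyor": "Running", "robot_cell_2": "Stopped"}

def status_payload() -> bytes:
    """Serialise the current status table as a UTF-8 JSON body."""
    return json.dumps(STATUS).encode("utf-8")

class StatusHandler(BaseHTTPRequestHandler):
    """Answers GET /status with the JSON status table; anything else is 404."""

    def do_GET(self) -> None:
        if self.path != "/status":
            self.send_error(404)
            return
        body = status_payload()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

def make_server(port: int = 8000) -> HTTPServer:
    """Create (but do not start) the status server on all interfaces."""
    return HTTPServer(("", port), StatusHandler)
```

On the Pi, `make_server().serve_forever()` would start the service, and the AR app would then poll `http://<pi-address>:8000/status`. Keeping the payload as plain JSON means the Unity side only needs a standard web request and a JSON parser.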

The AR app is configured to look for AR targets. Once a target is identified, an information panel showing the name and status of the equipment is displayed on the live video stream, positioned relative to the target. The colour of the panel is updated to reflect the equipment status (e.g. green for working, red for stopped). Status information is obtained via a web request to the Raspberry Pi. In this implementation the Raspberry Pi derived the status information by monitoring PLC I/O, as would be the case in a lightweight piggyback solution.
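Deriving status from monitored PLC I/O in a piggyback arrangement amounts to a small lookup. In the sketch below the signal names (`running`, `fault`) and the amber colour for errors are assumptions for illustration; only green for working and red for stopped come from the project.

```python
from typing import Dict

# Panel colours: green and red follow the project description; amber for
# errors is a hypothetical choice.
PANEL_COLOURS = {"Running": "green", "Stopped": "red", "Error": "amber"}

def derive_status(io: Dict[str, bool]) -> str:
    """Infer equipment status from monitored PLC I/O bits.

    Assumes two hypothetical signals: 'fault' (an error output) and
    'running' (e.g. a motor contactor output). A fault takes priority,
    and a missing signal is treated as off.
    """
    if io.get("fault", False):
        return "Error"
    if io.get("running", False):
        return "Running"
    return "Stopped"

def panel_colour(io: Dict[str, bool]) -> str:
    """Colour of the AR information panel for the given I/O state."""
    return PANEL_COLOURS[derive_status(io)]
```

Giving the fault signal priority means an error is never masked by a motor output that is still energised, which is the safer default for an operator-facing display.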

The implemented solution was very effective and highly responsive when used by an operator. The system could easily be extended to further pieces of equipment within the production system, but care must be taken in the design and positioning of the AR targets. Target size has a big impact on the read range achievable with the AR app: ideally, a target should be no smaller than one tenth of the read range (which is fairly large; a 3 m read range calls for a 300 mm target). The targets were also found not to work effectively when wrapped around curved surfaces. Richer data messages could be displayed in the app if the Raspberry Pi were more closely integrated into the control system.
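The one-tenth rule of thumb can be captured in two helper functions: one sizes a target for a required viewing distance, the other checks how far away an existing target can be read. This is a minimal sketch; the function names are illustrative.

```python
# Rule of thumb from the project: target size >= read range / 10.
RANGE_PER_TARGET_SIZE = 10

def min_target_size_mm(read_range_mm: float) -> float:
    """Smallest target that should be readable from read_range_mm away."""
    return read_range_mm / RANGE_PER_TARGET_SIZE

def max_read_range_mm(target_size_mm: float) -> float:
    """Furthest distance from which a target of the given size should be read."""
    return target_size_mm * RANGE_PER_TARGET_SIZE
```

For example, a 150 mm target should be read from no further than about 1.5 m. The ratio is the project's empirical guide rather than a Vuforia specification.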

Audio Based Control

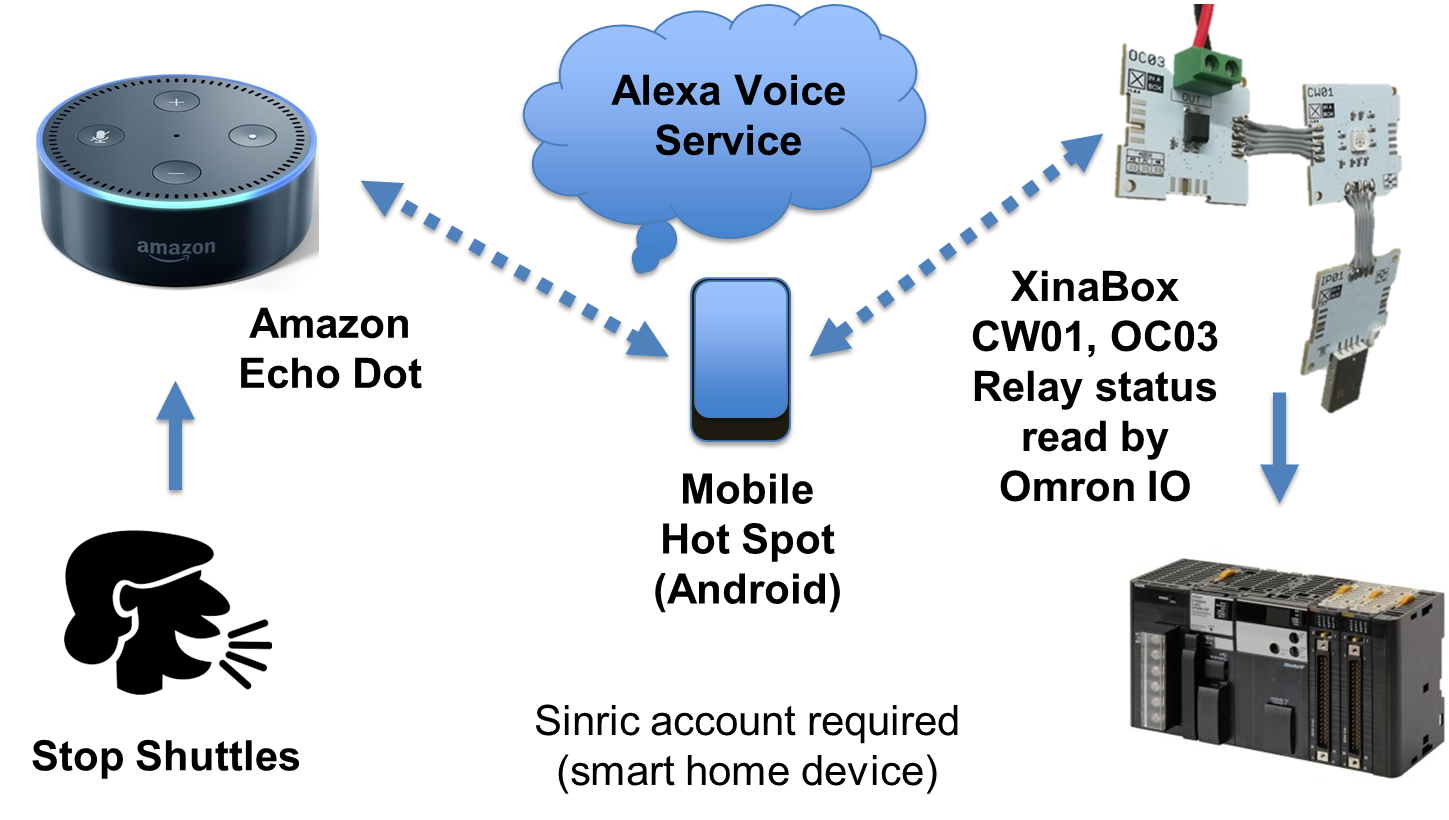

The goal of this project was to provide a low-cost solution enabling audio-based control of one of the production elements. Audio-based control in an industrial environment could of course be very problematic and controversial, but with the development of technologies such as Amazon’s Alexa we were keen to see what is possible. The students picked a conveyor control application in which the shuttles could be requested to stop and queue at an initialisation position. The system comprised an Amazon Echo Dot to access the Alexa voice recognition service, a XinaBox WiFi relay I/O module and an Android phone to provide a WiFi hotspot.

The Echo Dot was set up with an Amazon account to enable the Alexa service. The third-party ‘Sinric’ skill was then added, enabling Alexa to discover the XinaBox I/O module on the local WiFi hotspot provided by the Android phone. Voice commands could then be used to change the state of the I/O module (e.g. “Alexa, turn Cell 2 LR Mate on”).

The fundamental components of the audio-based control system were developed during the lab activity and worked well, but they were never integrated into the operation of the conveyor system. One challenge discovered during the project was getting verbal feedback from Alexa to confirm that an action had taken place, i.e. “closing the loop”. To achieve this, a second third-party skill, ‘NotifyMe’, was added, enabling a notification to be sent to Alexa via its RESTful API using a POST request.
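Closing the loop then comes down to sending one HTTP POST once an action has been confirmed. The sketch below builds such a request with Python's standard library. The endpoint URL and the `notification` / `accessCode` JSON fields reflect our understanding of the NotifyMe skill's public documentation and should be checked against it; the access code is a placeholder issued by the skill for each account. The request is constructed but not sent, so it can be inspected before use.

```python
import json
import urllib.request

# Assumed NotifyMe endpoint; verify against the skill's current documentation.
NOTIFYME_URL = "https://api.notifymyecho.com/v1/NotifyMe"

def build_notification(message: str, access_code: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that queues an Alexa notification."""
    payload = json.dumps({"notification": message, "accessCode": access_code})
    return urllib.request.Request(
        NOTIFYME_URL,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

# Usage (sends the request; needs network access and a real access code):
# req = build_notification("Shuttles queued at initialisation position", "YOUR_ACCESS_CODE")
# with urllib.request.urlopen(req) as resp:
#     print(resp.status)
```

Separating request construction from sending keeps the message content testable offline and lets the controller decide when, and whether, to announce a completed action.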

Date published

29 January 2019